It’s frustrating when you’re enjoying a long, fleshed-out roleplay and the LLM starts forgetting important details or acting out of character. It breaks your immersion and makes continuing a roleplay you’re invested in feel like a chore.

Simply increasing your Context Size and dumping more information into the prompt isn’t a good solution. You need to optimize your context cache to maintain an immersive and engaging experience. SillyTavern provides multiple tools to help you manage long chats, making it easy to enjoy long roleplays where the LLM doesn’t forget important details and remains coherent.

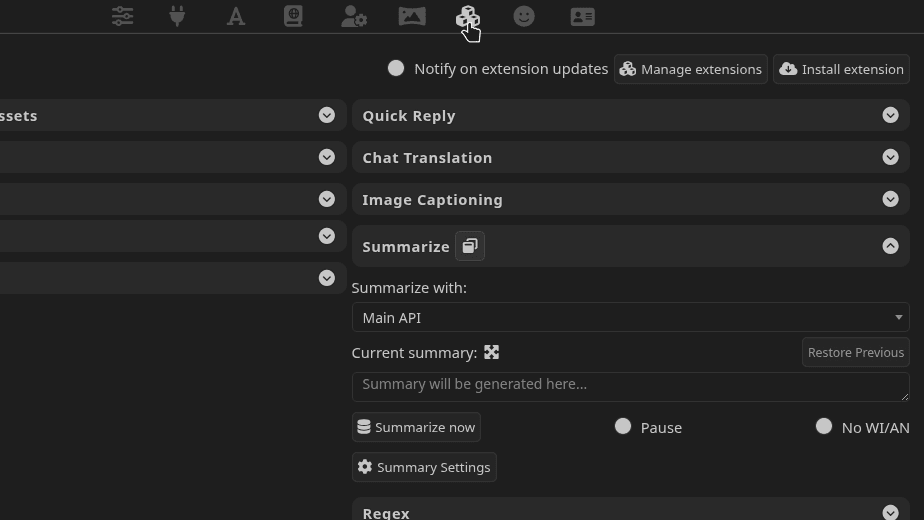

Use The Summarize Extension

Using the built-in Summarize extension is the easiest way to optimize your context cache for long chats on SillyTavern. It lets you generate summaries using an LLM or manually enter them.

While it is the easiest tool to use, LLM-generated summaries can sometimes miss important details or include unnecessary information. Smaller models aren’t as good at generating summaries as larger models like DeepSeek. However, even a large model like DeepSeek can omit details that are necessary for roleplay continuity.

You can access the Summarize extension in SillyTavern’s Extensions panel (stacked cubes icon). Clicking “Summarize now” generates a summary (when a chat is open), and clicking “Summary Settings” lets you configure options and enable automatic summary updates.

Configuring The Summarize Extension

SillyTavern’s documentation provides detailed explanations of what each configuration setting does.

Summarize With

Select Main API. The extension will use your current backend, model, and settings to generate summaries. For example, if you’re using an 8B model with KoboldCpp, that same model will generate your summaries.

Prompt Builder

Controls how SillyTavern builds the summary prompt. The default “Classic, blocking” should work in most cases. You can learn more about how the three different methods work in SillyTavern’s documentation.

Summary Prompt

You can customize the prompt that SillyTavern uses for generating the summary. SillyTavern’s default prompt works well in most cases, but you can modify it to provide further instructions to the LLM.

- Target summary length: You can set the desired length of your summary (in words) and use the {{words}} macro in the summary prompt. The target is a guideline rather than a hard limit, and the LLM may generate a summary that is shorter or longer than the length you specify.

- API response length: You can override the global response length (in tokens) that you’ve set in your AI Response Configuration settings. For example, this setting is useful when your global response length is 150 tokens for shorter LLM responses in chat, but you might not want to restrict the summary to 150 tokens.

- Max messages per request: Only works with “Raw, blocking” and “Raw, non-blocking” prompt builder options. You can define the maximum number of messages the LLM should summarize during each generation.
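To make the Target summary length option concrete, here is a minimal Python sketch of what a placeholder substitution like {{words}} amounts to. SillyTavern performs this substitution itself; the function name and the prompt text below are hypothetical and written only for this illustration.

```python
def fill_summary_prompt(template: str, target_words: int) -> str:
    """Replace the {{words}} placeholder with the target summary length."""
    return template.replace("{{words}}", str(target_words))


# Hypothetical prompt text, not SillyTavern's default summary prompt.
template = (
    "Summarize the story so far in at most {{words}} words. "
    "Keep names, promises, and unresolved plot threads."
)

prompt = fill_summary_prompt(template, 300)
print(prompt)
```

The model only ever sees the filled-in number, which is why the target length acts as an instruction to the LLM and not as an enforced limit.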

Update Frequency

These settings control how often summaries are automatically generated and updated. You can define how many messages or words SillyTavern processes before generating a new summary. Ideally, the first summary should be automatically generated before the initial messages drop out of the context window.

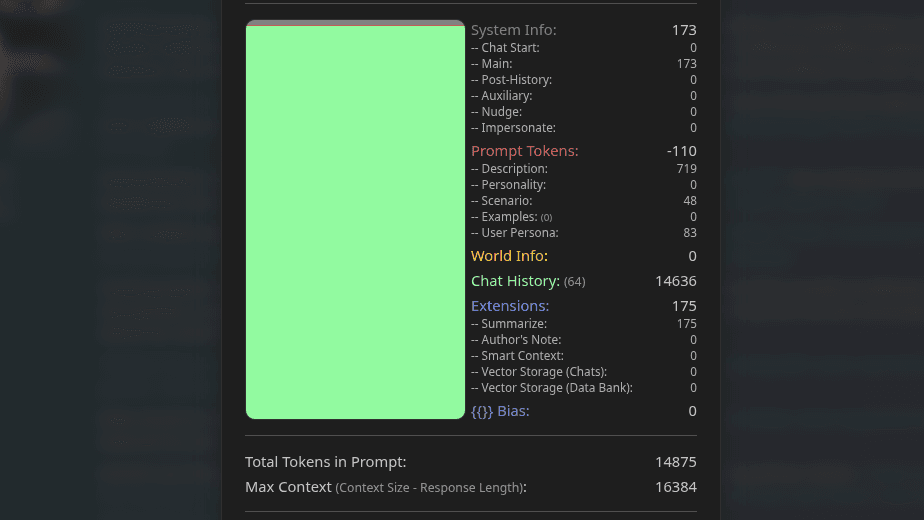

You can click on the Prompt icon on the most recent LLM response to see how many total tokens your chat has consumed. The Prompt Itemization gives you an idea of approximately how many messages it would take before the initial messages start dropping out of the context window.

You can click on the magic wand icon next to the frequency options to let SillyTavern set the optimal frequency based on your chat. For example, in our chat with 64 messages, where the chat history consumed 14,636 tokens (16,384 Context Size), SillyTavern recommended updating the summary every 60 messages.

Setting both update frequency options to 0 will disable automatic updates. If you decide to enable automatic summary updates, make sure you review the new summaries to ensure they include all important details and avoid unnecessary information.
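If you prefer to sanity-check the update frequency yourself, the rough arithmetic can be sketched in Python using the numbers from the Prompt Itemization. This is not how SillyTavern’s magic wand calculates its recommendation; the function name and the reserved_tokens parameter (space set aside for the character card, persona, summary, and response) are assumptions made for this sketch.

```python
def messages_that_fit(context_size: int, history_tokens: int,
                      message_count: int, reserved_tokens: int = 0) -> int:
    """Estimate how many average-sized messages fit in the context window.

    Assumes future messages are about as long as the existing ones.
    """
    if message_count <= 0 or history_tokens <= 0:
        raise ValueError("message_count and history_tokens must be positive")
    budget = max(context_size - reserved_tokens, 0)
    # floor(budget / average tokens per message), using integer arithmetic
    return budget * message_count // history_tokens


# Figures from the example chat: 64 messages, 14,636 tokens, 16,384 Context Size.
no_reserve = messages_that_fit(16_384, 14_636, 64)
# 1,500 reserved tokens is a made-up value for illustration.
with_reserve = messages_that_fit(16_384, 14_636, 64, reserved_tokens=1_500)

print(no_reserve)    # 71
print(with_reserve)  # 65
```

Set your update frequency below whatever estimate you get, so the summary is refreshed before the earliest messages drop out of the context window.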

Injection Template & Position

You can define how SillyTavern structures your summary and where it inserts it in the prompt. Depending on your chat or text completion preset and API, you may have to test if your chosen injection position works correctly.

For example, while using CherryBox 1.4 DeepSeek Preset and DeepSeek’s Official API, we noticed the summary prompt wasn’t injected when set to “After Main Prompt / Story String.” You can use the Prompt Itemization information to verify that SillyTavern successfully injects the summary into the prompt.

Summarized Messages And When To Manually Summarize

The number of messages the model can summarize depends on your Context Size. For example, if your Context Size is set to 8,192 tokens, the model can summarize about 15 to 20 messages.

The number may vary depending on the length of your messages, permanent tokens used by the character card, your persona, etc. Ideally, you should manually generate your first summary before the initial messages drop out of the context window.

You can click on the Prompt icon on the most recent LLM response to see how many total tokens your chat has consumed. The Prompt Itemization gives you an idea of when to manually generate your first summary.

How To Summarize Long Chats On SillyTavern

If you’ve exceeded your Context Size without generating a summary, or you want better summaries for a long chat, you can use SillyTavern’s /hide and /unhide commands to generate targeted summaries.

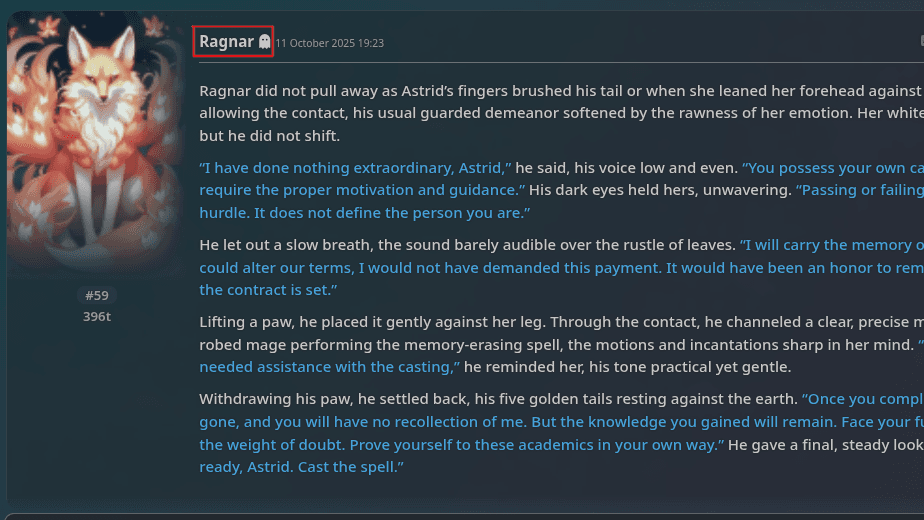

These commands hide and unhide one or more messages from the prompt, letting you control which messages remain in the context cache. Messages hidden from the prompt have a ghost icon next to your name or the character’s name.

For example, in our chat with 64 messages, we first used the /hide 30-64 command. The LLM could only access messages 1 to 29, and we manually generated a summary and saved it in a text editor.

Next, we used the command /unhide 30-64 first, and then /hide 1-29. The LLM could only access messages 30 to 64. We removed the previous summary before manually generating a new one and saved it in a text editor.

Since SillyTavern’s default summary prompt instructs the LLM to build upon any existing summary, it is important to remove the previous summary before generating a new one. Alternatively, you can modify the summary prompt.

Now we have two detailed summaries that we can merge and refine into a single detailed summary. We recommend breaking down your roleplay into chapters and using the /hide and /unhide commands to summarize messages in organized batches.

Manually generating summaries for long chats on SillyTavern in this manner gives you greater control over them and makes it easy to carry forward important details while removing unnecessary information.
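When you split a chat into several chapters, it helps to write down the commands for each batch before you start. The Python sketch below only produces the /hide and /unhide command text to type into SillyTavern; it does not talk to SillyTavern. The function name is hypothetical, and the message numbering follows the examples in this article. Unlike our walkthrough, it resets each batch by unhiding the whole range first, which is a small variation that keeps every batch self-contained.

```python
from typing import List, Tuple


def plan_batches(first: int, last: int,
                 batches: List[Tuple[int, int]]) -> List[List[str]]:
    """Return, for each batch, the commands that leave only that batch visible."""
    plans = []
    for start, end in batches:
        if not (first <= start <= end <= last):
            raise ValueError(f"batch {start}-{end} is outside {first}-{last}")
        commands = [f"/unhide {first}-{last}"]  # reset: make everything visible
        if start > first:
            commands.append(f"/hide {first}-{start - 1}")  # hide earlier messages
        if end < last:
            commands.append(f"/hide {end + 1}-{last}")  # hide later messages
        plans.append(commands)
    return plans


# The two batches from the 64-message example chat.
plans = plan_batches(1, 64, [(1, 29), (30, 64)])
for (start, end), commands in zip([(1, 29), (30, 64)], plans):
    print(f"Batch {start}-{end}: " + " then ".join(commands))
```

After running the commands for a batch, generate the summary, save it in a text editor, and clear the previous summary before moving on to the next batch.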

Manual Summaries Using A Free AI Assistant

If generating detailed summaries in SillyTavern is tedious or your model isn’t capable of generating decent summaries, you can use a free AI assistant like DeepSeek, Gemini, or ChatGPT to help generate one.

Note: We do not recommend using AI assistants to generate summaries if your chat contains NSFW content. NSFW content isn’t limited to sexual material; it also includes gore, drug-related topics, extreme violence, and more.

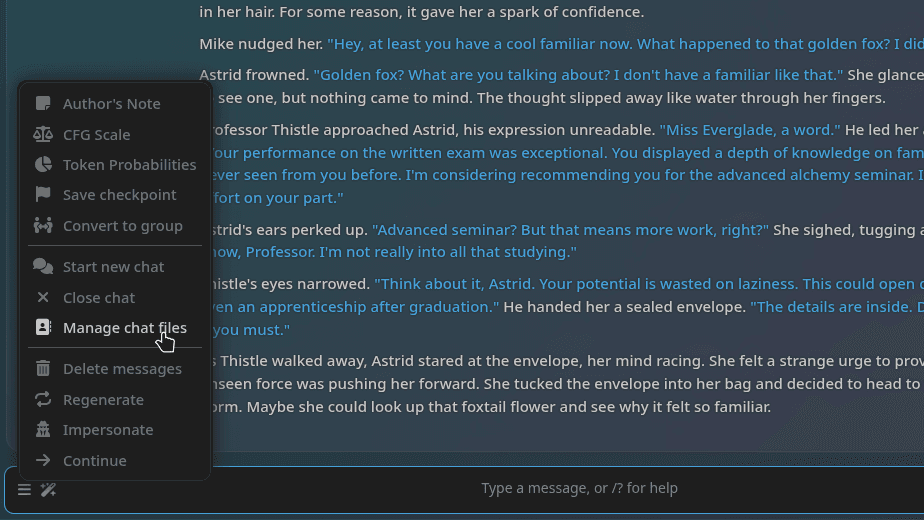

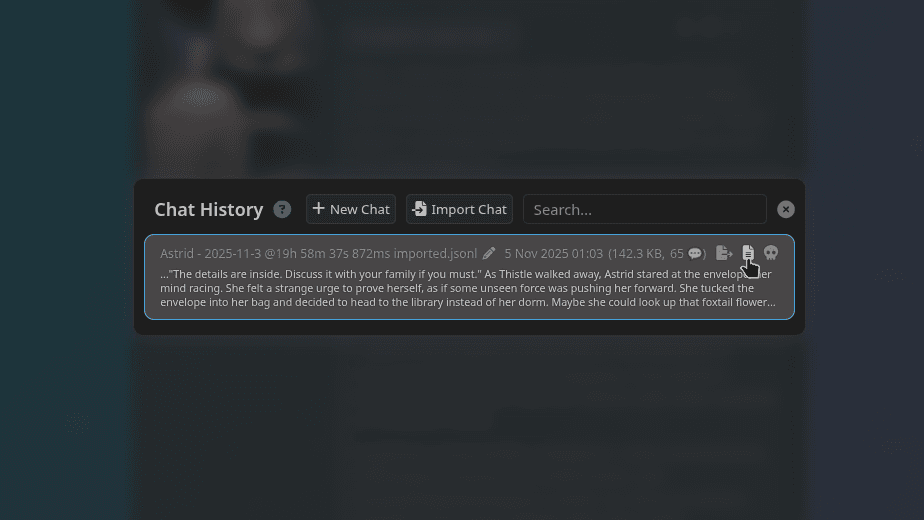

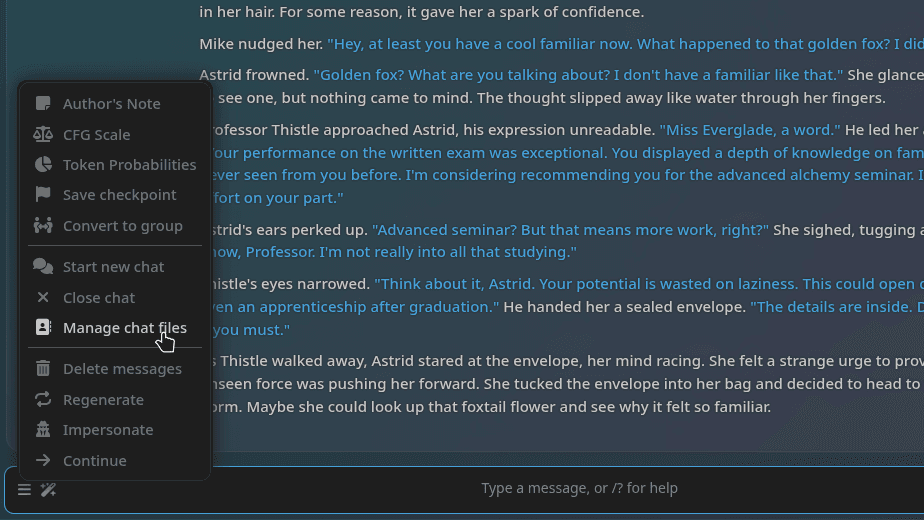

Click on the Chat Options button at the bottom left of the chat page, then select Manage chat files.

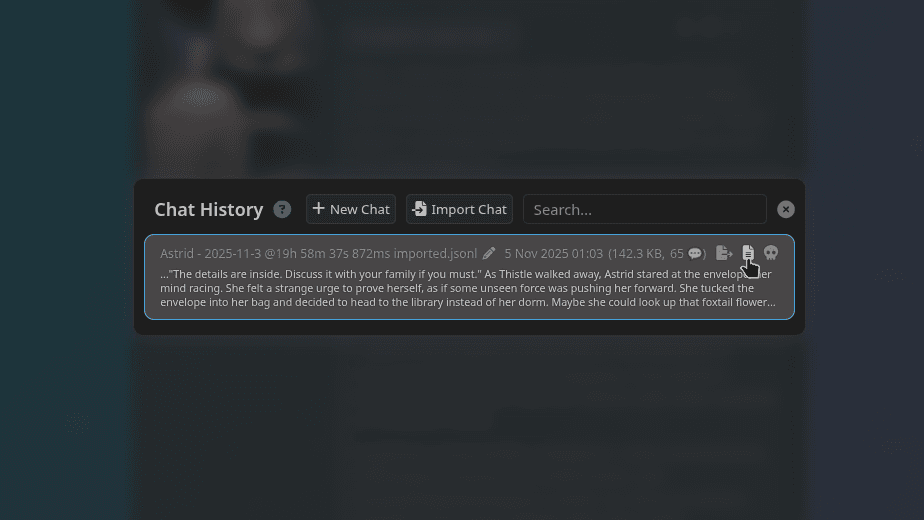

Export your chat as a text file. You can then upload this text file and ask DeepSeek, Gemini, or ChatGPT to generate a summary of your ongoing roleplay.

Optimize Context Cache Post Summarization

Once you’ve summarized your chat messages, you can optimize your context cache by removing them from the prompt. This helps you in several ways.

- When you reach your Context Size limit (for example: 16,384 tokens), SillyTavern begins removing older chat messages from your prompt to make space for new ones. The beginning of your chat history then changes with every new message, so you might not benefit from the input tokens cache mechanism, which relies on the start of the prompt staying the same.

- Roleplay messages include both narration and dialogue. Once summarized, narration and dialogue become unnecessary input tokens, wasting money and processing time. You don’t need previous messages to exist in your context cache word for word to maintain roleplay continuity.

- An optimized context cache with a solid summary is better than an information dump of 30-35 messages filled with narration that doesn’t affect the story’s future (for example: “he looks at her,” “he speaks with a steady voice,” etc.).
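The caching point above can be illustrated with a toy Python model. Real caches operate on tokens and behave differently from one backend or provider to another, so treat this as a simplified sketch: each prompt is a list of labeled segments, and the helper name is hypothetical.

```python
from typing import List


def shared_prefix(a: List[str], b: List[str]) -> int:
    """Count how many leading segments two prompts have in common."""
    count = 0
    for x, y in zip(a, b):
        if x != y:
            break
        count += 1
    return count


fixed = ["system prompt", "character card"]
messages = [f"msg {i}" for i in range(1, 11)]

# Full context: each new message pushes the oldest one out of the prompt.
rolling_turn_1 = fixed + messages[0:6]  # msg 1 to msg 6
rolling_turn_2 = fixed + messages[1:7]  # msg 2 to msg 7

# Summarized and hidden: older messages are replaced by a summary that stays put.
hidden_turn_1 = fixed + ["summary"] + messages[4:6]  # msg 5, msg 6
hidden_turn_2 = fixed + ["summary"] + messages[4:7]  # msg 5, msg 6, msg 7

print(shared_prefix(rolling_turn_1, rolling_turn_2))  # 2
print(shared_prefix(hidden_turn_1, hidden_turn_2))    # 5
```

In the rolling case, only the fixed segments match between turns. With a summary and hidden messages, the whole previous prompt is still the start of the next one.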

LLMs don’t pay equal attention to all information within the context window. They tend to have a bias towards information at the beginning and end of the prompt.

After summarizing, retain the most recent 5 to 8 messages and hide the rest using the /hide xx-xx command to optimize your context cache during long chats on SillyTavern. Make sure your summary includes all necessary information.
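Working out the range for the /hide command is simple arithmetic, sketched here in Python. The function name is hypothetical, and the first_id default of 1 follows the numbering used in this article’s examples; adjust it if your message numbers start elsewhere.

```python
from typing import Optional


def hide_command_keeping_recent(last_id: int, keep: int,
                                first_id: int = 1) -> Optional[str]:
    """Build a /hide command that leaves only the newest `keep` messages visible.

    Returns None when the chat is too short for anything to be hidden.
    """
    if keep < 0:
        raise ValueError("keep must not be negative")
    cutoff = last_id - keep  # the last message that gets hidden
    if cutoff < first_id:
        return None
    return f"/hide {first_id}-{cutoff}"


print(hide_command_keeping_recent(64, 8))  # /hide 1-56
print(hide_command_keeping_recent(64, 5))  # /hide 1-59
```

For the 64-message example chat, keeping 8 messages means hiding messages 1 to 56 and leaving 57 to 64 in the prompt alongside the summary.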

Think of the summary as your notes too, which you can refer to for past details as the story progresses. Maintaining summaries that help you will also help the LLM.

Use World Info (Lorebooks) For Long Chats On SillyTavern

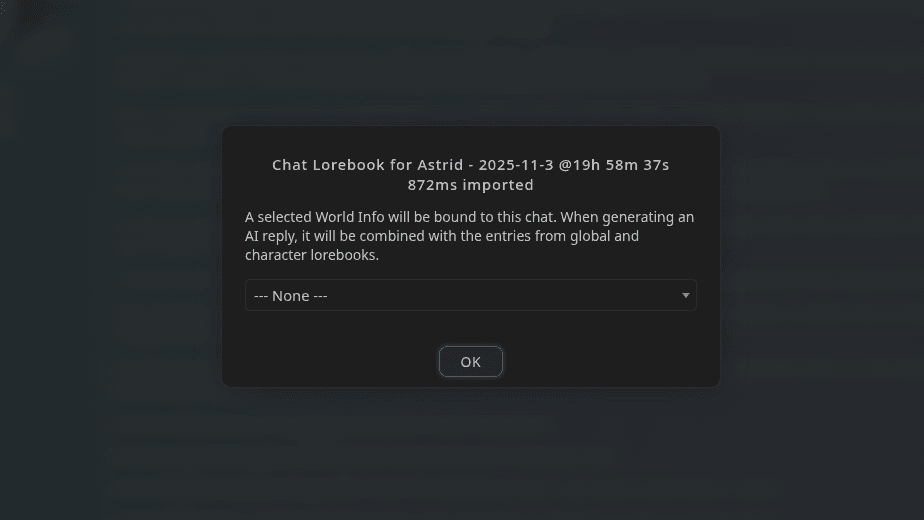

You can use World Info (Lorebooks) in SillyTavern for more than just lore and world-building. Create a lorebook and bind it to a chat using the book icon (Chat Lore button) on the character management tab. If you already have an existing lorebook bound to a chat, you can simply add entries to it.

You can create lorebook entries that include details about important events from your long chats on SillyTavern. While the Summarize extension helps you maintain a summary of your entire conversation, Lorebooks allow you to inject more detailed information about specific past events whenever required.

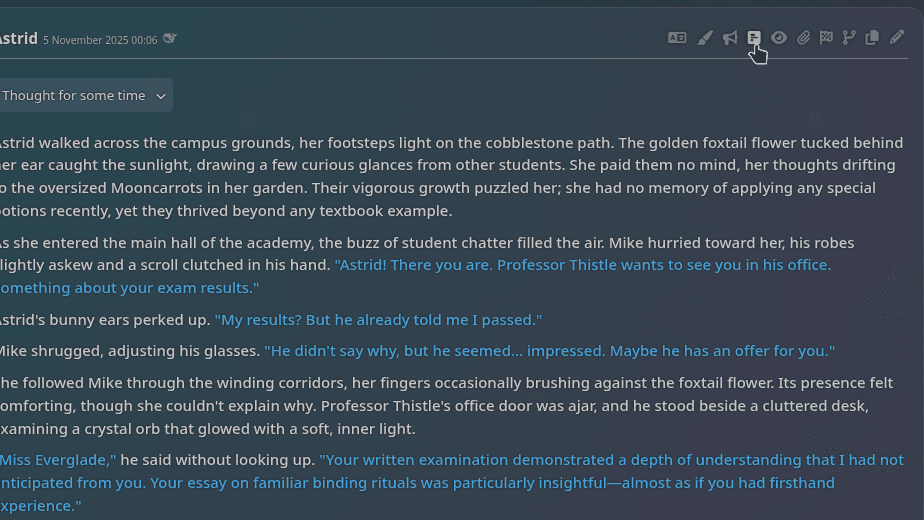

Let’s use our chat with Astrid as an example. We can create a lorebook entry for the blood contract between Astrid and Ragnar (messages #7 to #12) and insert it at the bottom of the prompt whenever triggered (at depth 0).

While the Summarize extension briefly mentions the contract and its terms, our Lorebook entry can be more detailed and include all the terms agreed upon between Astrid and Ragnar, as well as the serious nature of forming a blood contract with a war god.

Whenever we trigger the lorebook with its keywords, the LLM will receive detailed information about the contract, even during a long chat on SillyTavern with over 600 messages.
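Conceptually, a keyword-triggered entry works like the Python sketch below. This is a simplified model and not SillyTavern’s implementation or file format: the LoreEntry class, its field names, the scan_last parameter, and the placeholder entry text are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LoreEntry:
    keywords: List[str]
    content: str


def triggered(entries: List[LoreEntry], recent: List[str]) -> List[LoreEntry]:
    """Return the entries whose keywords appear in the recent messages."""
    text = " ".join(recent).lower()
    return [e for e in entries if any(k.lower() in text for k in e.keywords)]


def build_prompt(history: List[str], entries: List[LoreEntry],
                 scan_last: int = 2) -> List[str]:
    """Append triggered entries at the bottom of the prompt (depth 0)."""
    recent = history[-scan_last:] if scan_last > 0 else []
    return history + [e.content for e in triggered(entries, recent)]


contract = LoreEntry(
    keywords=["blood contract", "contract"],
    # Placeholder text: write the real terms from messages #7 to #12 here.
    content="[Blood contract between Astrid and Ragnar: full terms go here]",
)

with_keyword = build_prompt(
    ["Astrid sharpens her axe.", "Ragnar reminds her of the blood contract."],
    [contract],
)
without_keyword = build_prompt(
    ["Astrid sharpens her axe.", "Ragnar studies the map."],
    [contract],
)
print(with_keyword[-1])
print(len(without_keyword))  # 2, no entry was added
```

The entry costs no tokens while the contract isn’t being discussed and only enters the prompt when one of its keywords appears in the recent messages.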

World Info Strategies

You can create lorebook entries containing detailed information about any past event you consider important.

- First meeting with notable NPCs in the world, such as royalty, other factions, kingdoms, enemies, etc.

- First visit to a new location, such as a keep, stronghold, kingdom, planet, etc.

- Relationship developments (allies, contracts and agreements, moments between you and the character like a first kiss, etc.)

- Wars, battles, or other significant moments.

- Conclusions to story arcs/chapters.

Inserting your lorebook entries at depth 0 works well with the input tokens cache mechanism. It doesn’t disrupt the entire prompt by causing a change higher up in the structure.
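To see why the insertion point matters, compare how much of the previous prompt stays unchanged in each case. The Python sketch below uses labeled segments in place of real tokens and made-up segment names, so it only illustrates the idea; actual cache behavior depends on your backend or API provider.

```python
from itertools import takewhile
from typing import List


def unchanged_prefix(before: List[str], after: List[str]) -> int:
    """Count the leading segments that are identical in both prompts."""
    return sum(1 for _ in takewhile(lambda pair: pair[0] == pair[1],
                                    zip(before, after)))


previous_prompt = ["system prompt", "character card", "summary",
                   "msg 1", "msg 2", "msg 3"]
entry = "lorebook entry: blood contract"

# Entry inserted high up in the prompt, right after the character card.
inserted_high = previous_prompt[:2] + [entry] + previous_prompt[2:]
# Entry inserted at the bottom of the prompt (depth 0).
inserted_at_depth_0 = previous_prompt + [entry]

print(unchanged_prefix(previous_prompt, inserted_high))        # 2
print(unchanged_prefix(previous_prompt, inserted_at_depth_0))  # 6
```

An entry that appears high in the prompt changes everything after it, while an entry at depth 0 leaves the entire previous prompt intact as a reusable prefix.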

You can either create the lorebook entries manually or use the /hide xx-xx command to hide all unrelated messages and ask the LLM to generate a detailed summary of the remaining messages. Remember to delete all OOC messages and responses once done. Alternatively, you can also use free AI assistants for this task.

Click on the Chat Options button at the bottom left of the chat page, then select Manage chat files.

Export your chat as a text file. You can then upload this text file and ask DeepSeek, Gemini, or ChatGPT to generate a detailed summary for the relevant parts of the text file.

Note: We do not recommend using AI assistants to generate summaries if your chat contains NSFW content. NSFW content isn’t limited to sexual material; it also includes gore, drug-related topics, extreme violence, and more.

Think of lorebook entries for past events as sticky notes. You can create as many of them as you want, and these notes will help your LLM look up information related to a past event only when needed. It helps optimize your context cache and maintain memory and coherence in long chats on SillyTavern.

Extensions To Manage Long Chats On SillyTavern

If you don’t want to deal with the hassle of manually managing the Summarize extension or Lorebooks, you can use community-developed extensions to automate optimizing your context cache.

Qvink’s Message Summarize

Qvink’s SillyTavern Message Summarize extension is an alternative to the built-in Summarize extension.

- The extension summarizes messages individually, not all at once, which helps reduce inaccuracies and missing details.

- It maintains short-term memory that updates automatically over time, as well as long-term memories of messages you manually mark.

- The extension supports using a separate completion preset or connection profile for generating summaries. For example, you can use a small local model for roleplay and a larger model through OpenRouter for summaries.

- It automatically optimizes your context cache by hiding messages that have been summarized.

You can learn more about the extension and its features through its documentation.

Aiko Hanasaki’s Memory Books

Aiko Hanasaki’s SillyTavern Memory Books extension helps you automatically create “memories.”

- You mark the starting and ending messages (for example: 5-12), and the extension generates a summary and creates a lorebook entry.

- The extension provides multiple presets that let you generate detailed or brief summaries.

- The extension supports using different APIs and LLMs to generate memories through its profile management options.

- You can automate memory creation at set intervals.

- It automatically optimizes your context cache by hiding messages that have been summarized.

You can learn more about the extension and its features through its documentation.

How To Manage Long Chats On SillyTavern

To enjoy long chats on SillyTavern, you need to optimize your context cache to keep the LLM coherent and your experience immersive and engaging. The frontend provides several tools to help you optimize your context cache.

You can use the built-in Summarize extension to generate summaries using an LLM or manually enter them. To further optimize your context cache, you can hide summarized messages from your prompt to remove unnecessary tokens.

For a more immersive experience during long chats, you can create lorebook entries that detail important story developments and past events. These entries can include in-depth information, rather than just a brief summary that the Summarize extension provides.

To automate optimizing your context cache, you can use community-developed extensions like Qvink’s Message Summarize and Aiko Hanasaki’s Memory Books.